Integrating With Games

Ultralight offers developers the ability to display fast, modern HTML UI within games and other GPU-based apps.

Let's dive into the main things you need to know to integrate Ultralight into an existing game or game engine.

API Overview

When integrating into a game you should use the low-level Ultralight API, not the AppCore API.

The AppCore API is intended for standalone desktop apps and runs its own message loop, which may conflict with your game's existing run loop.

Use the API headers in <Ultralight/Ultralight.h> and operate on the Platform, Renderer, and View objects directly.

```cpp
///////////////////////////////////////////
// High level overview of using the API //
///////////////////////////////////////////

#include <Ultralight/Ultralight.h>

using namespace ultralight;

RefPtr<Renderer> renderer;

void InitLibrary() {
  // Do any custom config here
  Config config;
  Platform::instance().set_config(config);

  // my_gpu_driver and my_file_system are pointers to your own
  // GPUDriver and FileSystem implementations.
  Platform::instance().set_gpu_driver(my_gpu_driver);
  Platform::instance().set_file_system(my_file_system);

  // Create the library
  renderer = Renderer::Create();
}

void Shutdown() {
  // Releasing the last reference destroys the Renderer.
  renderer = nullptr;
}
```

Platform Singleton

The Platform singleton can be used to define various platform-specific properties and handlers, such as file system access, font loaders, and the GPU driver.

Ultralight provides a default GPUDriver (an offscreen bitmap driver that uses OpenGL; more on that below) and a default FontLoader, but no default FileSystem.

Look at the ultralight-ux/AppCore repo on GitHub for reference implementations of GPUDrivers, FontLoaders, and FileSystems.

When integrating into a game, you'll probably want to use your own GPUDriver and FileSystem implementation.

A custom FileSystem implementation can be used to load encrypted or compressed resources from your native resource loader.
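A custom FileSystem usually just forwards lookups to the game's own resource layer. The sketch below shows only that game-side piece. `ResourceArchive` is a hypothetical class, and its XOR step is a toy stand-in for real decryption or decompression, not actual encryption. The methods your FileSystem subclass must override, and their exact signatures, depend on your Ultralight version (see FileSystem.h). Those overrides would call something like `Exists()` and `Read()` here.

```cpp
#include <cstdint>
#include <map>
#include <optional>
#include <string>
#include <vector>

// Hypothetical in-memory archive standing in for your engine's resource loader.
// The XOR "obfuscation" is a toy placeholder, NOT real encryption.
class ResourceArchive {
 public:
  explicit ResourceArchive(std::uint8_t key) : key_(key) {}

  // Store a resource in obfuscated form (as an offline packing tool might).
  void Add(const std::string& path, const std::string& plain) {
    std::vector<std::uint8_t> bytes(plain.begin(), plain.end());
    for (auto& b : bytes) b ^= key_;
    entries_[path] = std::move(bytes);
  }

  bool Exists(const std::string& path) const {
    return entries_.count(path) != 0;
  }

  // Return the decoded contents, or std::nullopt if the path is unknown.
  std::optional<std::string> Read(const std::string& path) const {
    auto it = entries_.find(path);
    if (it == entries_.end()) return std::nullopt;
    std::string out(it->second.begin(), it->second.end());
    for (auto& c : out) {
      c = static_cast<char>(static_cast<std::uint8_t>(c) ^ key_);
    }
    return out;
  }

 private:
  std::uint8_t key_;
  std::map<std::string, std::vector<std::uint8_t>> entries_;
};
```

Keeping decoding inside the archive means the FileSystem layer never sees the packed format, so you can later swap XOR for real compression or encryption without touching the Ultralight-facing code.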

Renderer Singleton

The Renderer singleton maintains the lifetime of the library, orchestrates all painting and updating, and is required before creating any Views.

Set up the Platform singleton before creating the Renderer.

Only one Renderer should be created per process lifetime.

View Class

Views are containers for displaying HTML content.

```cpp
RefPtr<View> view;

void CreateView() {
  // Create a 500x500 HTML view (the last argument is the 'transparent' flag)
  view = renderer->CreateView(500, 500, false);

  // Load some HTML
  view->LoadHTML("<h1>Hello World!</h1>");
}
```

Each View has an associated RenderTarget that you can use to get the underlying texture ID when using a custom GPUDriver (more on that below).

Using the Bitmap API

Ultralight comes with a default, OpenGL-based GPUDriver that renders directly to an offscreen bitmap. This driver is used if you don't provide your own.

Should you use the Bitmap API or a custom GPUDriver?

The Bitmap API is much more convenient for getting started but has slightly higher latency and overhead.

Also, because it is OpenGL-based, you can't use it within an existing OpenGL-based game (at least on Windows, where WGL conflicts when multiple GL contexts are created on the same thread). We will be switching the Bitmap API to a multithreaded, CPU-only GPUDriver in the future.

You should use the Bitmap API if you are integrating Ultralight into a game engine that doesn't allow you to emit native GPU driver calls and isn't OpenGL-based.

Bitmap API

Every time you call Renderer::Render(), each View is drawn to an offscreen FBO, which is then blitted to a CPU bitmap that you can access via View::bitmap().

You can check whether the bitmap is dirty by calling View::is_bitmap_dirty(); the dirty state is cleared each time you call View::bitmap().
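When you lock a View's bitmap to read its pixels, the buffer is not necessarily tightly packed. Each row can be padded, so the row stride (reported by the Bitmap's row_bytes()) may exceed width * 4. If your engine's texture upload expects packed rows, a copy like the one below handles this. `PackRows` is a hypothetical helper. It assumes 4 bytes per pixel (BGRA) and takes width, height, and row_bytes from the bitmap.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy a locked bitmap whose rows may be padded (row_bytes >= width * 4)
// into a tightly packed buffer, as many texture-upload APIs expect.
std::vector<std::uint8_t> PackRows(const void* pixels, std::uint32_t width,
                                   std::uint32_t height, std::uint32_t row_bytes) {
  const std::size_t packed_row = static_cast<std::size_t>(width) * 4;  // 4 bytes/pixel assumed
  std::vector<std::uint8_t> packed(packed_row * height);
  const auto* src = static_cast<const std::uint8_t*>(pixels);
  for (std::uint32_t y = 0; y < height; ++y) {
    // Copy only the visible pixels of each row, skipping any padding.
    std::memcpy(packed.data() + y * packed_row,
                src + static_cast<std::size_t>(y) * row_bytes, packed_row);
  }
  return packed;
}
```

If your engine's upload call accepts a source stride directly, you can pass row_bytes to it instead and skip this copy.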

Here's some pseudo-code of how you would use this in your engine:

```cpp
void RenderFrame() {
  renderer->Render();

  if (view->is_bitmap_dirty()) {
    RefPtr<Bitmap> bitmap = view->bitmap();
    void* pixels = bitmap->LockPixels();

    // Use 'pixels' in your engine here, update on-screen texture, etc.

    bitmap->UnlockPixels();
  }
}
```

Using a Custom GPUDriver

Ultralight can emit raw GPU geometry / low-level draw calls to paint directly on the GPU without an intermediate CPU bitmap. We recommend this integration method for best performance.

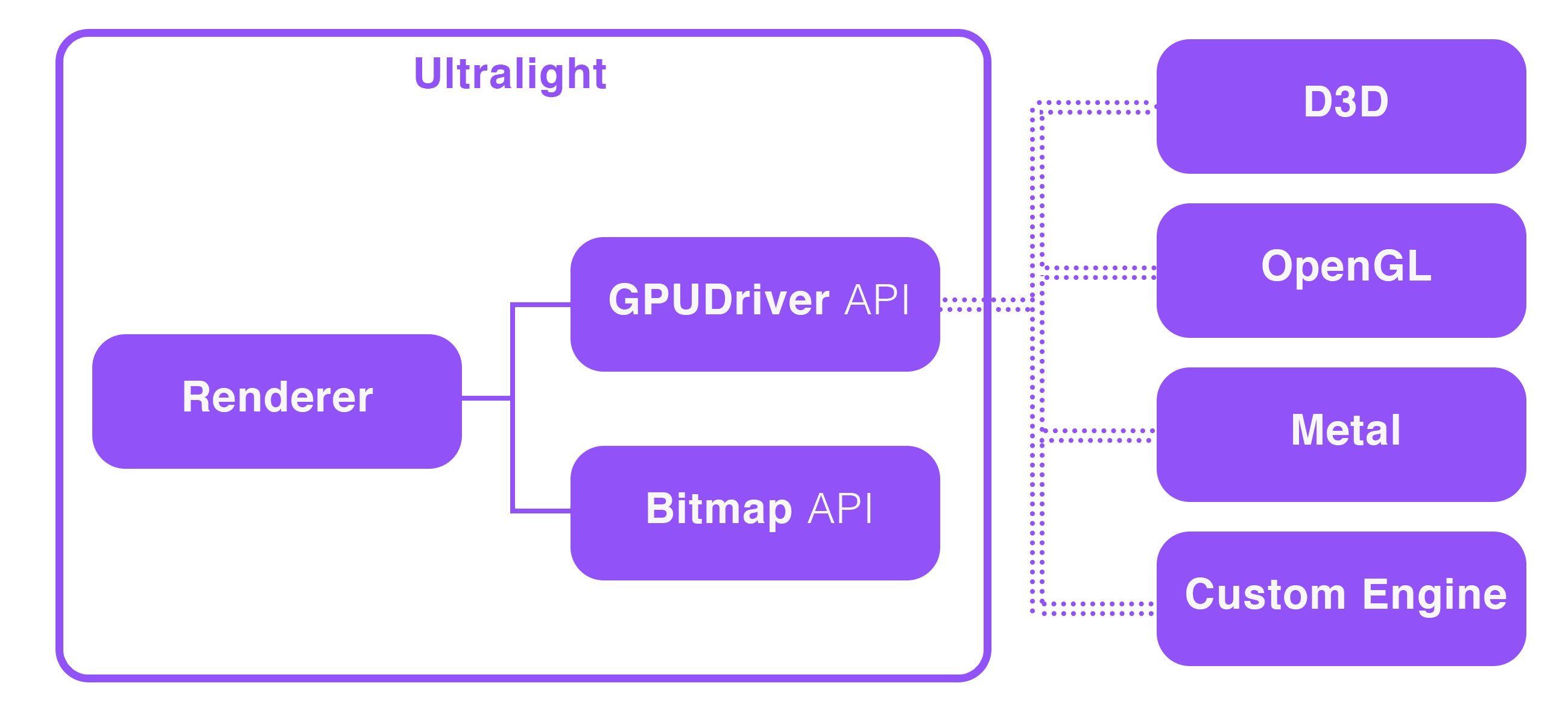

Virtual GPU Architecture

Ultralight was designed from the outset to be renderer-agnostic. All draw calls are emitted via the GPUDriver interface and expected to be translated into various platform-specific GPU technologies (D3D, Metal, OpenGL, etc.).

This approach allows Ultralight to be integrated directly with the native renderer of your game.

GPUDriver API

The first step to using a custom GPUDriver is to subclass the GPUDriver interface.

You'll need to handle tasks like creating a texture, creating vertex/index buffers, and binding shaders.

All GPUDriver calls are made during Renderer::Render(), but drawing is not performed immediately: Ultralight queues drawing commands via GPUDriver::UpdateCommandList() and expects you to dispatch them yourself.

- For porting to Direct3D 11, see: AppCore/src/win/d3d11

- For porting to Direct3D 12, see: AppCore/src/win/d3d12

- For porting to OpenGL, see: AppCore/src/linux/gl

- For porting to Metal, see: AppCore/src/mac/metal
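Resources are referred to by integer IDs. Ultralight asks your driver for new texture, render-buffer, and geometry IDs, then uses those IDs in later calls to create, update, bind, and destroy the resources. A driver therefore needs a table that maps IDs to native resources. The sketch below shows one for textures. `TextureRegistry` and `NativeTexture` are hypothetical names. Take the actual GPUDriver method names and signatures from GPUDriver.h in your SDK.

```cpp
#include <cstdint>
#include <unordered_map>

// Hypothetical native handle type; substitute your engine's
// (a GLuint, an ID3D11ShaderResourceView*, an engine resource index, ...).
using NativeTexture = std::uint64_t;

// Maps the integer texture IDs used in GPUDriver calls to native resources.
class TextureRegistry {
 public:
  // Hand out a fresh ID; this sketch reserves 0 to mean "no texture".
  std::uint32_t NextId() { return next_id_++; }

  void Create(std::uint32_t id, NativeTexture handle) { textures_[id] = handle; }

  // Returns 0 if the ID is unknown (never created or already destroyed).
  NativeTexture Lookup(std::uint32_t id) const {
    auto it = textures_.find(id);
    return it == textures_.end() ? 0 : it->second;
  }

  void Destroy(std::uint32_t id) { textures_.erase(id); }

 private:
  std::uint32_t next_id_ = 1;
  std::unordered_map<std::uint32_t, NativeTexture> textures_;
};
```

The same pattern applies to render buffers and geometry, each with its own table.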

Shader Programs

Ultralight relies on vertex and pixel shaders for CSS transforms and to draw things like borders, rounded rectangles, shadows, and gradients.

Right now we have only two shader types: kShaderType_Fill and kShaderType_FillPath.

Both use the same uniforms but have different vertex types.
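Because the two shader types take different vertex types, your engine needs an input layout for each. Ultralight names its vertex buffer formats after their attribute packing: "2f" is two floats and "4ub" is four unsigned bytes. The structs below mirror those layouts in C++. This sketch assumes the formats are 2f_4ub_2f (paths) and 2f_4ub_2f_2f_28f (fills). The field names and the shader-to-format pairing are assumptions; verify both against GPUDriver.h and the AppCore drivers for your SDK version.

```cpp
#include <cstddef>
#include <cstdint>

// Mirror of the 2f_4ub_2f format (assumed to be used with kShaderType_FillPath).
struct VertexPath {
  float pos[2];           // 2f: position
  std::uint8_t color[4];  // 4ub: RGBA color
  float obj[2];           // 2f: object-space coordinates
};

// Mirror of the 2f_4ub_2f_2f_28f format (assumed to be used with kShaderType_Fill).
struct VertexFill {
  float pos[2];           // 2f: position
  std::uint8_t color[4];  // 4ub: RGBA color
  float tex[2];           // 2f: texture coordinates
  float obj[2];           // 2f: object-space coordinates
  float data[7][4];       // 28f: seven float4 attributes of per-vertex data
};

// The input layouts you declare in your engine must match these byte sizes.
static_assert(sizeof(VertexPath) == 20, "2f_4ub_2f should be 20 bytes");
static_assert(sizeof(VertexFill) == 140, "2f_4ub_2f_2f_28f should be 140 bytes");
```

The static_asserts catch accidental padding at compile time. The offsets of each field (for example via offsetof) are what you would feed into a D3D input layout or glVertexAttribPointer.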

Reference implementations for Direct3D are provided as HLSL vertex and pixel shaders.

If your engine uses a custom shader language, you'll need to port these files over.

Device Scale

You can set a custom device scale (DPI scale) for your GPUDriver by setting device_scale_hint when setting up your Config. The default is 1.0.

```cpp
Config config;
config.device_scale_hint = 2.0;
Platform::instance().set_config(config);
```

Be aware that you'll need to compensate for this custom device scale in your GPUDriver implementation when translating logical units back to pixel units (most notably with GPUState::viewport_width and GPUState::viewport_height).
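Here is a sketch of that logical-to-pixel conversion. It assumes viewport sizes arrive in logical units and must be multiplied by the same factor you passed as device_scale_hint. `PixelSize` and `LogicalToPixels` are hypothetical helpers, and rounding to the nearest pixel is one reasonable choice among several.

```cpp
#include <cmath>
#include <cstdint>

struct PixelSize {
  std::uint32_t width;
  std::uint32_t height;
};

// Convert a logical size (e.g. the viewport size in GPUState) to physical
// pixels, using the device scale configured via Config::device_scale_hint.
PixelSize LogicalToPixels(std::uint32_t logical_w, std::uint32_t logical_h,
                          double device_scale) {
  return PixelSize{
      static_cast<std::uint32_t>(std::lround(logical_w * device_scale)),
      static_cast<std::uint32_t>(std::lround(logical_h * device_scale))};
}
```

For example, a 500 x 500 logical viewport at a device scale of 2.0 becomes a 1000 x 1000 pixel viewport when you set up the native viewport.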

Blending Modes

Ultralight uses a custom blend mode: you'll need to use the same blend functions in your engine to get color-accurate results when dispatching DrawGeometry commands with blending enabled.
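For checking your engine's output, or for writing a software fallback, the factors in the D3D11 blend description that follows reduce to the equations in this sketch. `RGBA` and `BlendUltralight` are hypothetical names. The ONE source factor for color implies the source color is premultiplied by alpha. The OpenGL call in the comment is the equivalent factor setup for GL-based engines, using the default GL_FUNC_ADD blend equation.

```cpp
// CPU reference for the blend factors in the D3D11 description:
//   color: src.rgb * ONE         + dst.rgb * (1 - src.a)
//   alpha: src.a   * (1 - dst.a) + dst.a   * ONE
// Equivalent OpenGL setup:
//   glBlendFuncSeparate(GL_ONE, GL_ONE_MINUS_SRC_ALPHA,
//                       GL_ONE_MINUS_DST_ALPHA, GL_ONE);
struct RGBA {
  float r, g, b, a;  // color channels premultiplied by alpha
};

RGBA BlendUltralight(RGBA src, RGBA dst) {
  const float inv_src_a = 1.0f - src.a;
  const float inv_dst_a = 1.0f - dst.a;
  return RGBA{src.r + dst.r * inv_src_a,
              src.g + dst.g * inv_src_a,
              src.b + dst.b * inv_src_a,
              src.a * inv_dst_a + dst.a};
}
```

The resulting alpha, src.a + dst.a - src.a * dst.a, matches standard "over" compositing, so only the color factors differ from a non-premultiplied setup.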

For reference, here is the render target blend description for the D3D11 driver:

```cpp
D3D11_RENDER_TARGET_BLEND_DESC rt_blend_desc;
ZeroMemory(&rt_blend_desc, sizeof(rt_blend_desc));
rt_blend_desc.BlendEnable = true;
rt_blend_desc.SrcBlend = D3D11_BLEND_ONE;
rt_blend_desc.DestBlend = D3D11_BLEND_INV_SRC_ALPHA;
rt_blend_desc.BlendOp = D3D11_BLEND_OP_ADD;
rt_blend_desc.SrcBlendAlpha = D3D11_BLEND_INV_DEST_ALPHA;
rt_blend_desc.DestBlendAlpha = D3D11_BLEND_ONE;
rt_blend_desc.BlendOpAlpha = D3D11_BLEND_OP_ADD;
rt_blend_desc.RenderTargetWriteMask = D3D11_COLOR_WRITE_ENABLE_ALL;
```

Render Loop Integration

Within your application's main run loop, you should:

- Call Renderer::Update() as often as possible.
- Call Renderer::Render() once per frame.
- After calling Renderer::Render(), check if your GPUDriver has any pending commands, and dispatch them by calling GPUDriver::DrawCommandList().
- Get the texture handle for each View and display it on an on-screen quad.
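The pattern behind HasCommandsPending() and DrawCommandList() is simple buffering. In this sketch, `Command` and `CommandQueue` are hypothetical simplifications, not Ultralight types. The SDK's own command records also carry GPU state and index ranges (see CommandList in GPUDriver.h). In a real driver, `draw` would bind the state and issue the indexed draw call for each command.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for one queued draw command.
struct Command {
  std::uint32_t geometry_id;
  std::uint32_t indices_count;
};

// Ultralight hands the driver command lists during Render(); the driver only
// records them here, and the game replays them later on its own render path.
class CommandQueue {
 public:
  // Called from your driver's UpdateCommandList() override (assumed wiring).
  void Update(const std::vector<Command>& list) {
    pending_.insert(pending_.end(), list.begin(), list.end());
  }

  bool HasCommandsPending() const { return !pending_.empty(); }

  // Replays every pending command through `draw`, then clears the queue.
  template <typename DrawFn>
  void DrawCommandList(DrawFn draw) {
    for (const Command& cmd : pending_) draw(cmd);
    pending_.clear();
  }

 private:
  std::vector<Command> pending_;
};
```

Deferring the actual draws this way lets you dispatch them at the point in your frame where the engine's render state is ready, rather than inside Renderer::Render().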

```cpp
void UpdateLogic() {
  // Calling Update() allows the library to service resource callbacks,
  // JavaScript events, and other timers.
  renderer->Update();
}

void RenderFrame() {
  renderer->Render();

  // gpu_driver is your own GPUDriver implementation.
  if (gpu_driver->HasCommandsPending())
    gpu_driver->DrawCommandList();

  // Do the rest of your drawing here.
}
```

Binding the View Texture

Ultralight doesn't actually draw anything to the screen: each View is drawn to an offscreen render texture that you can display however you wish.

To get the texture for a View, you'll need to call View::render_target():

```cpp
RenderTarget rtt_info = view->render_target();

// Get the Ultralight texture ID, use this with GPUDriver::BindTexture()
uint32_t tex_id = rtt_info.texture_id;

// Textures may have extra padding; to compensate for this you'll need
// to adjust your UV coordinates when mapping onto geometry.
Rect uv_coords = rtt_info.uv_coords;
```

Once you have this texture, you can display it on-screen as a quad or project it onto other geometry in-game.
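The UV adjustment means remapping your quad's 0..1 texture coordinates into the uv_coords sub-rectangle, so the padding outside it is never sampled. `UVRect`, `UV`, and `RemapUV` are hypothetical names. `UVRect` mirrors the left/top/right/bottom fields of the Rect returned in RenderTarget.

```cpp
// Hypothetical mirror of the uv_coords rectangle from RenderTarget; the
// View's pixels occupy only this sub-rectangle of the (padded) texture.
struct UVRect {
  float left, top, right, bottom;
};

struct UV {
  float u, v;
};

// Map a quad-local UV (0..1 across the quad) into the padded texture.
UV RemapUV(UV quad_uv, const UVRect& r) {
  return UV{r.left + quad_uv.u * (r.right - r.left),
            r.top + quad_uv.v * (r.bottom - r.top)};
}
```

Applying this to the four corners of a quad gives (left, top) to (right, bottom). That is usually all you need; the same function also works per-vertex for geometry with arbitrary UVs.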

Passing Mouse Input

Passing mouse input to a View is straightforward: just create a MouseEvent and pass it to View::FireMouseEvent():

```cpp
MouseEvent evt;
evt.type = MouseEvent::kType_MouseMoved;
evt.x = 100;
evt.y = 100;
evt.button = MouseEvent::kButton_None;

view->FireMouseEvent(evt);
```

Make sure that all coordinates are relative to the View's quad in screen space and are scaled to logical units using the current device scale (if you set one).
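The coordinate conversion described above can be wrapped in a small helper. This sketch assumes you know the quad's screen rectangle in pixels and the View's size in logical units. `ViewPoint` and `ScreenToView` are hypothetical names. When the quad is drawn at its native size (logical size times device scale), the ratio view_w / quad_w reduces to 1 / device scale.

```cpp
#include <cmath>

struct ViewPoint {
  int x, y;     // View-local logical coordinates
  bool inside;  // false if the point falls outside the View's quad
};

// quad_x/quad_y/quad_w/quad_h: where the View's quad is drawn on screen (pixels).
// view_w/view_h: the View's size in logical units.
ViewPoint ScreenToView(double screen_x, double screen_y,
                       double quad_x, double quad_y, double quad_w, double quad_h,
                       double view_w, double view_h) {
  const double lx = (screen_x - quad_x) * (view_w / quad_w);
  const double ly = (screen_y - quad_y) * (view_h / quad_h);
  const bool inside = lx >= 0 && ly >= 0 && lx < view_w && ly < view_h;
  return ViewPoint{static_cast<int>(std::floor(lx)),
                   static_cast<int>(std::floor(ly)), inside};
}
```

The resulting x and y can be assigned directly to MouseEvent::x and MouseEvent::y.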

Passing Keyboard Input

Keyboard events are broken down into three major types:

- KeyEvent::kType_RawKeyDown: physical key press
- KeyEvent::kType_KeyUp: physical key release
- KeyEvent::kType_Char: text generated from a key press (typically only a single character)

You should almost always use KeyEvent::kType_RawKeyDown for key presses, since it lets WebCore translate these events properly.
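In practice, your game's input layer turns each physical key press into a RawKeyDown event, followed by a Char event when the key produces text. Each release becomes a KeyUp event. The sketch below shows that translation step. `KeyKind`, `SynthKey`, `OnKeyPressed`, and `OnKeyReleased` are hypothetical. In a real integration, each record would become an ultralight::KeyEvent passed to View::FireKeyEvent().

```cpp
#include <string>
#include <vector>

// Hypothetical simplified event record.
enum class KeyKind { RawKeyDown, Char, KeyUp };

struct SynthKey {
  KeyKind kind;
  int virtual_key_code;  // 0 for Char events in this sketch
  std::string text;      // only used by Char events
};

// One physical press: RawKeyDown first, then Char if the key produced text.
std::vector<SynthKey> OnKeyPressed(int virtual_key_code,
                                   const std::string& generated_text) {
  std::vector<SynthKey> events;
  events.push_back({KeyKind::RawKeyDown, virtual_key_code, ""});
  if (!generated_text.empty()) {
    events.push_back({KeyKind::Char, 0, generated_text});
  }
  return events;
}

// One physical release: a single KeyUp.
std::vector<SynthKey> OnKeyReleased(int virtual_key_code) {
  std::vector<SynthKey> events;
  events.push_back({KeyKind::KeyUp, virtual_key_code, ""});
  return events;
}
```

Non-text keys such as the arrow keys simply produce no Char event.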

You will need to create a KeyEvent and pass it to View::FireKeyEvent():

```cpp
// Synthesize a key press event for the 'Right Arrow' key
KeyEvent evt;
evt.type = KeyEvent::kType_RawKeyDown;
evt.virtual_key_code = KeyCodes::GK_RIGHT;
evt.native_key_code = 0;
evt.modifiers = 0;

// You'll need to generate a key identifier from the virtual key code
// when synthesizing events. This function is provided in KeyEvent.h
GetKeyIdentifierFromVirtualKeyCode(evt.virtual_key_code, evt.key_identifier);

view->FireKeyEvent(evt);
```

In addition to key presses and releases, you'll need to pass in the actual text generated (for example, pressing the A key should generate the character 'a').

```cpp
// Synthesize an event for text generated from pressing the 'A' key
KeyEvent evt;
evt.type = KeyEvent::kType_Char;
evt.text = "a";
evt.unmodified_text = "a"; // If not available, set to same as evt.text

view->FireKeyEvent(evt);
```

Multithreading

The Ultralight API is not thread-safe at this time; calling the API from multiple threads is not supported and will lead to subtle issues or application instability.

The library does not need to run on the main thread, though: you can create the Renderer on another thread, as long as you make all calls to the API on that same thread.
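One way to follow this rule is to give Ultralight its own thread and post all work to it. Below is a minimal sketch of such a dispatcher that uses only the C++ standard library. `UIThread` is a hypothetical helper, and the tasks you post would contain your Renderer and View calls.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>

// Runs every posted task on one dedicated thread, so all Ultralight API
// calls can be funneled through a single thread even if other threads
// request them.
class UIThread {
 public:
  UIThread() : worker_([this] { Run(); }) {}

  // Drains any remaining tasks, then joins the worker thread.
  ~UIThread() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      stopping_ = true;
    }
    cv_.notify_one();
    worker_.join();
  }

  // Safe to call from any thread.
  void Post(std::function<void()> task) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      tasks_.push(std::move(task));
    }
    cv_.notify_one();
  }

  std::thread::id id() const { return worker_.get_id(); }

 private:
  void Run() {
    for (;;) {
      std::function<void()> task;
      {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return stopping_ || !tasks_.empty(); });
        if (tasks_.empty()) return;  // stopping and fully drained
        task = std::move(tasks_.front());
        tasks_.pop();
      }
      task();  // run outside the lock so tasks can Post() more work
    }
  }

  std::mutex mutex_;
  std::condition_variable cv_;
  std::queue<std::function<void()>> tasks_;
  bool stopping_ = false;
  std::thread worker_;  // declared last so the members above exist before Run() starts
};
```

Create the Renderer inside the first posted task so that it lives on that thread as well. Do the same for anything the game thread needs back, such as copying bitmap pixels, which should happen inside a task.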
